Laboratory 3 Exercise - Brian Mere

1

In

Proof

User 2 can read the file. As given by page 39, the same i-number is given to both files. The i-node has 2 pointers keeping track before user 1 deletes it, but since it's linked by user 2 still then it's not marked for deletion.

☐

2

Under what circumstances is multiprogramming likely to increase CPU utilization? Why?

Proof

If any process, or set of processes, are IO bound and/or can be waited on or split up, then useful work (utilization) will increase. I mean think about it. Have you ever had a situation where your keyboard wasn't synced with what you were typing on the screen because another process was blocking its progress? In this situation it'd be better to employ multiprogramming.

☐

3

Suppose a computer can execute 1 billion instructions per second and that a system call takes 1000 instructions, including the trap and the context switching. How many system calls can the computer execute per second and still have half the CPU capacity for running application code?

Proof

Assuming half CPU capacity then we have

☐

4

What is a race condition? What are the symptoms of a race condition?

Proof

A race condition is:

essentially, having different sequences of events interfering with each other is a race condition.

The symptoms of a race condition are that data between processes are incorrectly calculated. For example, if two files are reading/writing to the same file location (or they are linked) then we need to consider race conditions, as if one writes to it then those changes need to be reflected on the read of the other (and vice versa).

☐

5

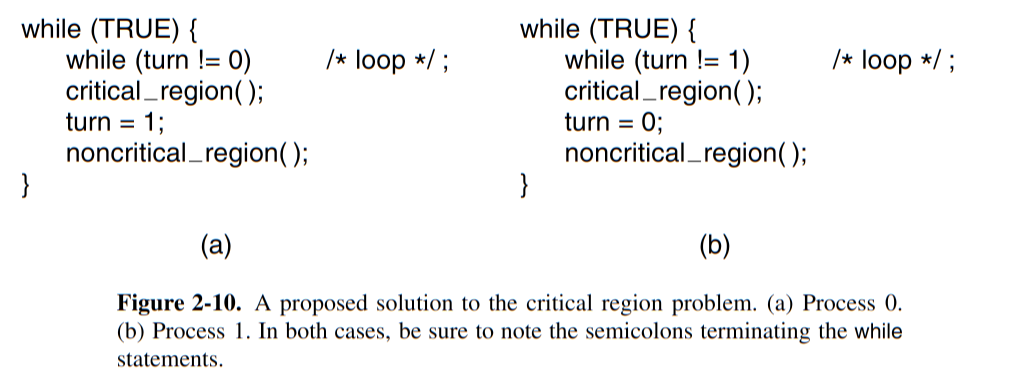

Does the busy waiting solution using the turn variable (see below) work when the two processes are running on a shared-memory multiprocessor, that is, two CPUs, sharing a common memory?

Proof

Yes there is a problem. If (a) is way slower than (b), and sets turn = 1;, then (b) enters the critical region. It is possible for (a) to then enter the noncritical_region() and pass the while(turn != 0); and then (a) would enter the critical_region() all while (b) is still in the critical_region().

☐

6

Describe how an operating system that can disable interrupts could implement semaphores. That is, what steps would have to happen in which order to implement the semaphore operations safely?

Proof

Here's a simple pseudo-code operation for our solution:

typedef sem_t unsigned int;

sem_t semaphore;

void lock()

{

lock_interrupts();

DOWN();

}

void unlock()

{

UP();

unlock_interrupts();

}

☐

7

Round robin schedulers normally maintain a list of all runnable processes, with each process occurring exactly once in the list. What would happen if a process occurred twice in the list? Can you think of any reason for allowing this? (and what is the reason).

Proof

Yes! You would want to sometimes put the process in multiple places in the list. The reason is that, often, you'd want this process to have twice as much yields than a normal thread (one with only one spot in the list). For example, you probably want to have things like IO to have more spots in that list than say some "Hello World x100" program.

☐

8

Five batch jobs,

: 10 priority 3 : 3 priority 5 : 4 priority 2 : 7 priority 1 : 6 priority 4

Whereis the highest priority. For each of the following scheduling algorithms, determine the time at which each job completes and the mean process turnaround time. Assume a 1 second quantum and ignore process switching overhead.

a: Round robin

b: Priority scheduling

c: First-come, first served (given that they arrive in alphabetical order)

d: Shortest job first

for (a) assume that the system is multiprogrammed, and that each job gets its fair share of the CPU. For (b)-(d) assume that only one job at a time runs, and each job runs until it is finished. All jobs are completely CPU bounds.

Proof

a:

1 5 10 15 20 25 30

Process order: ABCDEABCDEABCDEACDEADEADEADAAA

Process | Time | Priority | Round Robin Time

--------------------------------------------

A | 10 | 3 | 30 (last A)

B | 3 | 5 | 12 (last B)

C | 4 | 2 | 17 (last C)

D | 7 | 1 | 27 (last D)

E | 6 | 4 | 25 (last E)

For the mean turnaround time it's:

b:

Here the processes are in order, so then:

1 5 10 15 20 25 30

Process order: BBBEEEEEEAAAAAAAAAACCCCDDDDDDD

Process | Time | Priority | Priority Time

--------------------------------------------

A | 10 | 3 | 19

B | 3 | 5 | 3

C | 4 | 2 | 23

D | 7 | 1 | 30

E | 6 | 4 | 9

c:

1 5 10 15 20 25 30

Process order: AAAAAAAAAABBBCCCCDDDDDDDEEEEEE

Process | Time | Priority | First-come Time

--------------------------------------------

A | 10 | 3 | 10

B | 3 | 5 | 13

C | 4 | 2 | 17

D | 7 | 1 | 24

E | 6 | 4 | 30

d:

1 5 10 15 20 25 30

Process order: BBBCCCCEEEEEEDDDDDDDAAAAAAAAAA

Process | Time | Priority | Round Robin Time

--------------------------------------------

A | 10 | 3 | 30

B | 3 | 5 | 3

C | 4 | 2 | 7

D | 7 | 1 | 20

E | 6 | 4 | 13

☐

9

Re-do Laboratory 3 Exercise - Brian Mere#8 (a) with the modification that job

Proof

n| Process Order | End Time

---------------------------

1| A B C D E | 4.5

2| A B C D E | 9

3| A B C D E | 13.5

4| A B C D E | 17

5| A C D E | 19.5

6| A D E | 22

7| A D | 23.5

8| A D | 25

9| A D | 26.5

A| A D | 28

B| D | 29.5

B| D | 31

B| D | 32.5

B| D | 34

This gives ending times per process of:

Process | Time | Priority | End Time

--------------------------------------------

A | 10 | 3 | 27.5

B | 3 | 5 | 11

C | 4 | 2 | 15.5

D | 7 | 1 | 34

E | 6 | 4 | 22

Giving a mean execution time of:

☐

10

A CPU-bound process running on CTSS needs 30 quanta to complete. How many times must

it be swapped in, including the first time (before it has run at all)? Assume that there are

always other runnable jobs and that the number of priority classes is unlimited.

Proof

Every-time we swap we double the amount of quanta (setting 1 quanta when swapping in) so then we have 1 quanta for 1 swap, 2 additional quanta for 2 swaps, and so on. Mathematically, this means:

where

for our quanta time

☐