Suppose $T$ is a self-adjoint operator on a finite-dimensional inner product space $V$ and $2$ and $3$ are the only eigenvalues of $T$. Then:

$$T^2 - 5T + 6I = 0$$

*Proof*

Notice:

$$T^2 - 5T + 6I = (T - 2I)(T - 3I)$$

Let $v \in V$ be arbitrary and apply both sides to it. Since $V$ is a finite-dimensional inner product space, we have to consider whether $V$ is a complex or a real vector space.

For a complex vector space, $T$ is self-adjoint and thus normal, so the Complex Spectral Theorem gives that $V$ has an orthonormal basis $e_1, ..., e_n$ consisting of eigenvectors of $T$. Since $2$ and $3$ are the only eigenvalues of $T$, each $e_j$ satisfies $Te_j = \lambda_je_j$ with $\lambda_j \in \{2, 3\}$. As such, any $v \in V$ can be written as:

$$v = a_1e_1 + \cdots + a_ne_n$$

so then:

$$(T - 2I)(T - 3I)v = \sum_{j=1}^n a_j(T - 2I)(T - 3I)e_j = 0$$

where the $0$'s come from the fact that $(T - 3I)e_j = 0$ when $\lambda_j = 3$ and $(T - 2I)e_j = 0$ when $\lambda_j = 2$ (the two factors commute, so either one can be applied first). Thus, since $v$ was arbitrary, $T^2 - 5T + 6I = 0$.

For a real vector space, the same process applies since $T$ is self-adjoint, so the Real Spectral Theorem gives that $V$ has an orthonormal eigenbasis of the same kind.

☐
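As a sanity check on the identity $T^2 - 5T + 6I = 0$, here is a small worked example. The matrix is an illustrative choice, not one taken from the proof: a symmetric (hence self-adjoint) operator on $\mathbb{R}^2$ with trace $5$ and determinant $6$, so its eigenvalues are exactly $2$ and $3$.

$$
\begin{align}
\mathcal{M}(T) &= \begin{bmatrix} 5/2 & 1/2 \\ 1/2 & 5/2 \end{bmatrix}, \qquad \mathcal{M}(T^2) = \begin{bmatrix} 13/2 & 5/2 \\ 5/2 & 13/2 \end{bmatrix} \\
\mathcal{M}(T^2 - 5T + 6I) &= \begin{bmatrix} 13/2 - 25/2 + 6 & 5/2 - 5/2 \\ 5/2 - 5/2 & 13/2 - 25/2 + 6 \end{bmatrix} = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix}
\end{align}
$$

Here $(1, 1)$ is an eigenvector for $3$ and $(1, -1)$ is an eigenvector for $2$, and they are orthogonal, as the Spectral Theorem predicts.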

### 3

> [!theorem]
> Give an example of an operator $T \in \mathcal{L}(\mathbb{C}^3)$ such that $2$ and $3$ are the only eigenvalues of $T$ while $T^2 - 5T + 6I \neq 0$.

*Proof*

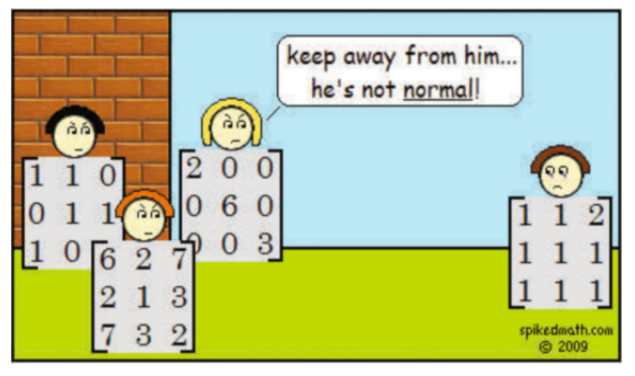

As the previous theorem implies, we should construct $T$ to not be self-adjoint. As such, define $T$ by its action on the standard basis, given by the matrix:

Notice that the only eigenvalues here are $2$ and $3$. We can see this because the only eigenvectors are:

Now notice that:

Thus $T^2 - 5T + 6I \neq 0$.

☐
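One matrix that fits this description is sketched below. It is an illustrative assumption, not necessarily the matrix used in the proof above. It is upper triangular, so its eigenvalues are its diagonal entries $2$ and $3$, and it is not equal to its conjugate transpose, so $T$ is not self-adjoint with respect to the standard inner product.

$$
\begin{align}
\mathcal{M}(T) &= \begin{bmatrix} 2 & 1 & 0 \\ 0 & 2 & 0 \\ 0 & 0 & 3 \end{bmatrix} \\
\mathcal{M}\big((T - 2I)(T - 3I)\big) &= \begin{bmatrix} 0 & 1 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 1 \end{bmatrix}\begin{bmatrix} -1 & 1 & 0 \\ 0 & -1 & 0 \\ 0 & 0 & 0 \end{bmatrix} = \begin{bmatrix} 0 & -1 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix} \neq 0
\end{align}
$$

Since $T^2 - 5T + 6I = (T - 2I)(T - 3I)$, this particular $T$ sends the second standard basis vector to the negative of the first, so the operator is not zero.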

### 6

> [!theorem]
> A normal operator on a complex inner product space is self-adjoint iff all its eigenvalues are real.

*Proof*

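Consider a normal operator $T \in \mathcal{L}(V)$ where $V$ is a complex inner product space. By the Complex Spectral Theorem, $V$ has an orthonormal basis $e_1, ..., e_n$ consisting of eigenvectors of $T$, where $n = \dim V$.

If $T$ is self-adjoint and $Tv = \lambda v$ with $v \neq 0$, then $\lambda\braket{v, v} = \braket{Tv, v} = \braket{v, Tv} = \overline{\lambda}\braket{v, v}$, so $\lambda$ is real.

For the other direction, suppose that $T$ only has real eigenvalues, and let $\lambda_1, ..., \lambda_n$ be the eigenvalues corresponding to $e_1, ..., e_n$. We have $n$ of them because the Complex Spectral Theorem gave us an orthonormal basis of eigenvectors. Since $T$ only has real eigenvalues, each $\lambda_j$ is real. For any $v = a_1e_1 + \cdots + a_ne_n \in V$:

$$T^*v = \sum_{j=1}^n a_jT^*e_j = \sum_{j=1}^n a_j\overline{\lambda_j}e_j = \sum_{j=1}^n a_j\lambda_je_j = Tv$$

where the second step uses $T^*e_j = \overline{\lambda_j}e_j$ (which holds because $T$ is normal) and the last step is from the fact that $\overline{\lambda_j} = \lambda_j$. Thus $T^* = T$, so $T$ is self-adjoint, since $v$ was an arbitrary choice.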

☐
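To see that the condition on the eigenvalues does real work, consider the following small example. The operator is an illustrative assumption, not one from the proof above. On $\mathbb{C}^2$ with the standard inner product, take:

$$
\begin{align}
\mathcal{M}(T) &= \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}, \qquad \mathcal{M}(T^*) = \begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix} \\
\mathcal{M}(TT^*) &= \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} = \mathcal{M}(T^*T)
\end{align}
$$

So $T$ is normal, but $T^* = -T \neq T$. Consistent with the theorem, its eigenvalues are the roots of $\lambda^2 + 1 = 0$, namely $\pm i$, which are not real.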

### 7

> [!theorem]
> Suppose $V$ is a complex inner product space and $T \in \mathcal{L}(V)$ is a normal operator such that $T^9 = T^8$. Then $T$ is self-adjoint and $T^2 = T$.

*Proof*

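Suppose $\dim V = n$. Since $T$ is normal, $V$ has an orthonormal basis consisting of eigenvectors of $T$, namely $e_1, ..., e_n$, where each $e_j$ has corresponding eigenvalue $\lambda_j$ (we don't know yet if $T$ is self-adjoint, so we'll assume the $\lambda_j$'s are all complex for now).

Via the Complex Spectral Theorem, since $T$ is normal, $T$ has a diagonal matrix with respect to our orthonormal basis:

$$\mathcal{M}(T) = \begin{bmatrix} \lambda_1 & & 0 \\ & \ddots & \\ 0 & & \lambda_n \end{bmatrix}$$

so, since $T^9 = T^8$:

$$\begin{bmatrix} \lambda_1^9 & & 0 \\ & \ddots & \\ 0 & & \lambda_n^9 \end{bmatrix} = \begin{bmatrix} \lambda_1^8 & & 0 \\ & \ddots & \\ 0 & & \lambda_n^8 \end{bmatrix}$$

Thus each $\lambda_j^9 = \lambda_j^8$. As a result, factoring out $\lambda_j^8$ gives $\lambda_j^8(\lambda_j - 1) = 0$, so $\lambda_j \in \{0, 1\}$ are the only possibilities. As such, all $\lambda_j \in \mathbb{R}$, so $T$ must be self-adjoint (a normal operator on a complex inner product space with only real eigenvalues is self-adjoint). Furthermore, notice that for each $\lambda_j$, if $\lambda_j = 0$ then $\lambda_j^2 = 0$, so $\lambda_j^2 = \lambda_j$. The same holds if $\lambda_j = 1$. Hence, the following is true:

$$\mathcal{M}(T^2) = \begin{bmatrix} \lambda_1^2 & & 0 \\ & \ddots & \\ 0 & & \lambda_n^2 \end{bmatrix} = \begin{bmatrix} \lambda_1 & & 0 \\ & \ddots & \\ 0 & & \lambda_n \end{bmatrix} = \mathcal{M}(T)$$

Thus $T^2 = T$.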

☐

### 8

> [!theorem]
> Give an example of an operator $T$ on a complex vector space such that $T^9 = T^8$ but $T^2 \neq T$.

*Proof*

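We just need to give an operator that isn't normal, in particular one that isn't invertible (an invertible $T$ with $T^9 = T^8$ would satisfy $T = I$ and hence $T^2 = T$). As such, let $T \in \mathcal{L}(V)$ be defined by its action on some basis of $V$ (which is $n$-dimensional). For simplicity, let $V = \mathbb{C}^2$ and $n = 2$. Choose $T$ such that:

Notice that:

While: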

☐
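One operator with these properties is sketched below. It is an illustrative assumption, not necessarily the one chosen in the proof above. On $\mathbb{C}^2$ with the standard basis, take the nilpotent operator:

$$
\begin{align}
\mathcal{M}(T) &= \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}, \qquad \mathcal{M}(T^2) = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix} \\
\mathcal{M}(T^9) &= \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix} = \mathcal{M}(T^8)
\end{align}
$$

So $T^9 = T^8 = 0$, while $T^2 = 0 \neq T$. With respect to the standard inner product this $T$ is not normal, since $\mathcal{M}(TT^*) = \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix}$ while $\mathcal{M}(T^*T) = \begin{bmatrix} 0 & 0 \\ 0 & 1 \end{bmatrix}$.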

### 9

> [!theorem]
> Suppose $V$ is a complex inner product space. Then every normal operator on $V$ has a square root. Namely, for every normal operator $T \in \mathcal{L}(V)$ there is an operator $S \in \mathcal{L}(V)$ where $S^2 = T$.

*Proof*

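Since $V$ is a complex inner product space and $T \in \mathcal{L}(V)$ is an arbitrary normal operator, by the Complex Spectral Theorem $T$ has a diagonal matrix with respect to some orthonormal basis $\beta$ of $V$. Namely:

$$\mathcal{M}(T, \beta) = \begin{bmatrix} \lambda_1 & & 0 \\ & \ddots & \\ 0 & & \lambda_n \end{bmatrix}$$

where $\lambda_1, ..., \lambda_n \in \mathbb{C}$. Choose $S \in \mathcal{L}(V)$ to be the operator where:

$$\mathcal{M}(S, \beta) = \begin{bmatrix} \sqrt{\lambda_1} & & 0 \\ & \ddots & \\ 0 & & \sqrt{\lambda_n} \end{bmatrix}$$

with each $\sqrt{\lambda_j}$ denoting some complex square root of $\lambda_j$ (one always exists in $\mathbb{C}$). Notice that:

$$\mathcal{M}(S^2, \beta) = \mathcal{M}(S, \beta)^2 = \begin{bmatrix} \lambda_1 & & 0 \\ & \ddots & \\ 0 & & \lambda_n \end{bmatrix} = \mathcal{M}(T, \beta)$$

Thus $S^2 = T$.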

☐
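A small worked instance of this construction may help. The specific matrix is an illustrative assumption, not part of the proof. Suppose $T$ is already diagonal with respect to an orthonormal basis $\beta$, with eigenvalues $-1$ and $4$:

$$
\begin{align}
\mathcal{M}(T, \beta) &= \begin{bmatrix} -1 & 0 \\ 0 & 4 \end{bmatrix}, \qquad \mathcal{M}(S, \beta) = \begin{bmatrix} i & 0 \\ 0 & 2 \end{bmatrix} \\
\mathcal{M}(S^2, \beta) &= \begin{bmatrix} i^2 & 0 \\ 0 & 2^2 \end{bmatrix} = \begin{bmatrix} -1 & 0 \\ 0 & 4 \end{bmatrix} = \mathcal{M}(T, \beta)
\end{align}
$$

The eigenvalue $-1$ has no real square root, which is where working over $\mathbb{C}$ is used in this construction.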

### 10

> [!theorem]
> Give an example of a real inner product space $V$ and $T \in \mathcal{L}(V)$ and real numbers $b, c$ with $b^2 < 4c$ such that $T^2 + bT + cI$ is not invertible.

*Proof*

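As the hint suggests, we should try to have $T$ not be self-adjoint. Namely, via the proof of Chapter 7 - Operators on Inner Product Spaces#^94dc7b, we should try to find some $T \in \mathcal{L}(V)$ such that $T \neq T^*$. Have $b = 0$ and $c = 1$, so that $b^2 = 0 < 4 = 4c$. Define $T \in \mathcal{L}(\mathbb{R}^2)$, where $\beta$ is the standard basis, by $\mathcal{M}(T, \beta) = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$, so that:

$$
\begin{align}
\mathcal{M}(T^2 + bT + cI, \beta) &= \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}^2 + b\begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix} + c \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}\\
&= \begin{bmatrix} -1 & 0 \\ 0 & -1 \end{bmatrix} + \begin{bmatrix} 0 & -b \\ b & 0 \end{bmatrix} + \begin{bmatrix} c & 0 \\ 0 & c \end{bmatrix}\\
&= \begin{bmatrix} -1 + c & -b \\ b & -1 + c \end{bmatrix}\\
&= \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix} \qquad \text{(using } b = 0,\ c = 1\text{)}
\end{align}
$$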

Which is not invertible as it's the zero map.

☐

### 11

> [!theorem]
> Every self-adjoint operator on $V$ has a cube root, an operator $S \in \mathcal{L}(V)$ such that $S^3 = T$.

*Proof*

If $T \in \mathcal{L}(V)$ is a self-adjoint operator on $V$, then by either the Real or Complex Spectral Theorem, $T$ has a diagonal matrix with respect to some orthonormal basis of $V$, called $\beta = \{v_1, ..., v_n\}$. As a result:

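$$\mathcal{M}(T, \beta) = \begin{bmatrix} \lambda_1 & & 0 \\ & \ddots & \\ 0 & & \lambda_n \end{bmatrix}$$

where each $\lambda_j \in \mathbb{R}$ since $T$ is self-adjoint. Define $S \in \mathcal{L}(V)$ by letting $\mathcal{M}(S, \beta)$ be the diagonal matrix with entries $\sqrt[3]{\lambda_1}, ..., \sqrt[3]{\lambda_n}$ (the real cube roots), so that $\mathcal{M}(S^3, \beta) = \mathcal{M}(S, \beta)^3 = \mathcal{M}(T, \beta)$.

Thus $S^3 = T$ as desired.

☐

### 14

> [!theorem]
> Suppose $U$ is a finite-dimensional real vector space and $T \in \mathcal{L}(U)$. Then $U$ has a basis consisting of eigenvectors of $T$ iff there is an inner product on $U$ that makes $T$ into a self-adjoint operator.

*Proof*

$(\rightarrow)$: Suppose $U$ has a basis $u_1, ..., u_m$ consisting of eigenvectors of $T$. Thus $Tu_i = \lambda_iu_i$ for all $i \in \{1, ..., m\}$ where $\lambda_i \in \mathbb{R}$. Create the inner product $\braket{\cdot , \cdot}_U$ such that: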

$$\braket{a_1u_1 + \cdots + a_mu_m, b_1u_1 + \cdots + b_mu_m}_U = a_1b_1 + \cdots + a_mb_m$$

Thus, notice that this forces $u_1, ..., u_m$ to be specifically an *orthonormal* eigenbasis for $U$ (as the basis vectors are eigenvectors). Thus, using the Real Spectral Theorem, $T$ must be self-adjoint.

$(\leftarrow)$: Suppose there exists an inner product on $U$, denoted $\braket{\cdot, \cdot}_U$, that makes $T$ a self-adjoint operator. Since $U$ is a real vector space and $T$ is self-adjoint, by the Real Spectral Theorem $U$ has an orthonormal basis $u_1, ..., u_m$ consisting of eigenvectors of $T$.

☐

### 15

> [!theorem]
> Find the matrix entry below that is covered up.
>

*Proof*

Let: